Tidy datasets are all alike, but every messy dataset is messy in its own way.

–Hadley Wickham (cf. Leo Tolstoy)

A great deal of data both does and should live in tabular formats; to put it flatly, this means formats that have rows and columns. In a theoretical sense, it is possible to represent every collection of structured data in terms of multiple "flat" or "tabular" collections if we also have a concept of relations. Relational database management systems (RDBMS) have had a great deal of success since 1970, and a very large part of all the world's data lives in RDBMS's. Another large share lives in formats that are not relational as such, but that are nonetheless tabular, wherein relationships may be imputed in an ad hoc, but uncumbersome, way.

As the Preface mentioned, the data ingestion chapters will concern themselves chiefly with structural or mechanical problems that make data dirty. Later in the book we will focus more on content or numerical issues in data.

This chapter discusses tabular formats including CSV, spreadsheets, SQL databases, and scientific array storage formats. The last sections look at some general concepts around data frames, which will typically be how data scientists manipulate tabular data. Much of this chapter is concerned with the actual mechanics of ingesting and working with a variety of data formats, using several different tools and programming languages. The Preface discusses why I wish to remain language agnostic—or multilingual—in my choices. Where each format is prone to particular kinds of data integrity problems, special attention is drawn to that. Actually remediating those characteristic problems is largely left until later chapters; detecting them is the focus of our attention here.

As The Hitchhiker's Guide to the Galaxy is humorously inscribed: "Don't Panic!" We will explain in much more detail the concepts mentioned here.

We run the setup code that will be standard throughout this book. As the Preface mentions, each chapter can be

run in

full, assuming available configuration files have been utilized. The prompts you see throughout this book, such

as

In [1]: and

practice in Python to use import *, we do so here to bring in many names without a long block of imports.

from src.setup import *

%load_ext rpy2.ipython

%%capture --no-stdout err %%R library(tidyverse)

With our various Python and R libraries now available, let us utilize them to start cleaning data.

After every war someone has to tidy up.

–Maria Wisława Anna Szymborska

Concepts:

Hadley Wickham and Garrett Grolemund, in their excellent and freely available book, [R for Data Science](https://r4ds.had.co.nz/), promote the concept of "tidy data." The Tidyverse collection of R packages attempt to realize this concept in concrete libraries. Wickham and Grolemund's idea of tidy data has a very close intellectual forebearer in the concept of database normalization, which is a large topic addressed in depth neither by them nor in this current book. The canonical reference on database normalization is C. J. Date's An Introduction to Database Systems (Addison Wesley; 1975 and numerous subsequent editions).

In brief, tidy data carefully separates variables (the columns of a table; also called features or fields) from observations (the rows of a table; also called samples). At the intersection of these two, we find values, one data item (datum) in each cell. Unfortunately, the data we encounter is often not arranged in this useful way, and it requires normalization. In particular, what are really values are often represented either as columns or as rows instead. To demonstrate what this means, let us consider an example.

Returning to the small elementary school class we presented in the Preface, we might encounter data looking like this:

students = pd.read_csv('data/students-scores.csv')

students

| Last Name | First Name | 4th Grade | 5th Grade | 6th Grade | |

|---|---|---|---|---|---|

| 0 | Johnson | Mia | A | B+ | A- |

| 1 | Lopez | Liam | B | B | A+ |

| 2 | Lee | Isabella | C | C- | B- |

| 3 | Fisher | Mason | B | B- | C+ |

| 4 | Gupta | Olivia | B | A+ | A |

| 5 | Robinson | Sophia | A+ | B- | A |

This view of the data is easy for humans to read. We can see trends in the scores each student received over several years of education. Moreover, this format might lend itself to useful visualizations fairly easily.

# Generic conversion of letter grades to numbers

def num_score(x):

to_num = {'A+': 4.3, 'A': 4, 'A-': 3.7,

'B+': 3.3, 'B': 3, 'B-': 2.7,

'C+': 2.3, 'C': 2, 'C-': 1.7}

return x.map(lambda x: to_num.get(x, x))

This next cell uses a "fluent" programming style that may look unfamiliar to some Python programmers. I discuss this style in the section below on data frames. The fluent style is used in many data science tools and languages. For example, this is typical Pandas code that plots the students' scores by year.

(students

.set_index('Last Name')

.drop('First Name', axis=1)

.apply(num_score)

.T

.plot(title="Student score by year")

.legend(bbox_to_anchor=(1, .75))

)

plt.savefig("img/(Ch01)Student score by year");

This data layout exposes its limitations once the class advances to 7th grade, or if we were to obtain 3rd grade information. To accommodate such additional data, we would need to change the number and position of columns, not simply add additional rows. It is natural to make new observations or identify new samples (rows), but usually awkward to change the underlying variables (columns).

The particular class level (e.g. 4th grade) that a letter grade pertains to is, at heart, a value not a variable. Another way to think of this is in terms of independent variables versus dependent variables. Or in machine learning terms, features versus target. In some way, the class level might correlate with or influence the resulting letter grade; perhaps the teachers at the different levels have different biases, or children of a certain age lose or gain interest in schoolwork, for example.

For most analytic purposes, this data would be more useful if we make it tidy (normalized) before further

processing.

In Pandas, the DataFrame.melt() method can perform this tidying. We pin some of the columns as

id_vars, and we set a name for the combined columns as a variable and the letter grade as a single

new

column. This Pandas method is slightly magical, and takes some practice to get used to. The key thing is that it

preserves data, simply moving it between column labels and data values.

students.melt(

id_vars=["Last Name", "First Name"],

var_name="Level",

value_name="Score"

).set_index(['First Name', 'Last Name', 'Level'])

| Score | |||

|---|---|---|---|

| First Name | Last Name | Level | |

| Mia | Johnson | 4th Grade | A |

| Liam | Lopez | 4th Grade | B |

| Isabella | Lee | 4th Grade | C |

| Mason | Fisher | 4th Grade | B |

| ... | ... | ... | ... |

| Isabella | Lee | 6th Grade | B- |

| Mason | Fisher | 6th Grade | C+ |

| Olivia | Gupta | 6th Grade | A |

| Sophia | Robinson | 6th Grade | A |

18 rows × 1 columns

In the R Tidyverse, the procedure is similar. Do not worry about the extra Jupyter magic

%%capture, this

simply quiets extra informational messages sent to STDERR that would distract from the printed book. A

tibble that we see here is simply a kind of data frame that is preferred in the Tidyverse.

%%capture --no-stdout err

%%R

library('tidyverse')

studentsR <- read_csv('data/students-scores.csv')

studentsR

── Column specification ────────────────────────────────────────────────────────────────── cols( `Last Name` = col_character(), `First Name` = col_character(), `4th Grade` = col_character(), `5th Grade` = col_character(), `6th Grade` = col_character() ) # A tibble: 6 x 5 `Last Name` `First Name` `4th Grade` `5th Grade` `6th Grade` <chr> <chr> <chr> <chr> <chr> 1 Johnson Mia A B+ A- 2 Lopez Liam B B A+ 3 Lee Isabella C C- B- 4 Fisher Mason B B- C+ 5 Gupta Olivia B A+ A 6 Robinson Sophia A+ B- A

Within the Tidyverse, specifically within the tidyr package, there is a function

pivot_longer() that is similar to Pandas' .melt(). The aggregation names and values

have

parameters spelled names_to and values_to, but the operation is the same.

%%capture --no-stdout err

%%R

studentsR <- read_csv('data/students-scores.csv')

studentsR %>%

pivot_longer(c(`4th Grade`, `5th Grade`, `6th Grade`),

names_to = "Level",

values_to = "Score")

── Column specification ────────────────────────────────────────────────────────────────── cols( `Last Name` = col_character(), `First Name` = col_character(), `4th Grade` = col_character(), `5th Grade` = col_character(), `6th Grade` = col_character() ) # A tibble: 18 x 4 `Last Name` `First Name` Level Score <chr> <chr> <chr> <chr> 1 Johnson Mia 4th Grade A 2 Johnson Mia 5th Grade B+ 3 Johnson Mia 6th Grade A- 4 Lopez Liam 4th Grade B 5 Lopez Liam 5th Grade B 6 Lopez Liam 6th Grade A+ 7 Lee Isabella 4th Grade C 8 Lee Isabella 5th Grade C- 9 Lee Isabella 6th Grade B- 10 Fisher Mason 4th Grade B 11 Fisher Mason 5th Grade B- 12 Fisher Mason 6th Grade C+ 13 Gupta Olivia 4th Grade B 14 Gupta Olivia 5th Grade A+ 15 Gupta Olivia 6th Grade A 16 Robinson Sophia 4th Grade A+ 17 Robinson Sophia 5th Grade B- 18 Robinson Sophia 6th Grade A

The simple example above gives you a first feel for tidying tabular data. To reverse the tidying operation that

moves

variables (columns) to values (rows), the pivot_wider() function in tidyr can be used. In Pandas

there

are several related methods on DataFrames, including .pivot(), .pivot_table(), and

.groupby() combined with .unstack(), which can create columns from rows (and do many

other

things too).

Having looked at the idea of tidyness as a general goal for tabular, let us being looking at specific data formats, beginning with comma-separated values and fixed-width files.

Speech sounds cannot be understood, delimited, classified and explained except in the light of the tasks which they perform in language.

–Roman Jakobson

Concepts:

Delimited text files, especially comma-separated values (CSV) files, are ubiquitous. These are text files that put multiple values on each line, and separate those values with some semi-reserved character, such as a comma. They are almost always the exchange format used to transport data between other tabular representations, but a great deal of data both starts and ends life as CSV, perhaps never passing through other formats.

Reading delimited files is not the fastest way of reading data from disk into RAM memory, but it is also not the slowest. Of course, that concern only matters for large-ish data sets, not for the small data sets that make up most of our work as data scientists (small nowadays means roughly "fewer then 100k rows").

There are a great number of deficits in CSV files, but also some notable strengths. CSV files are the format second most susceptible to structural problems. All formats are generally equally prone to content problems, which are not tied to the format itself. Spreadsheets like Excel are, of course, by a very large margin the worst format for every kind of data integrity concern.

At the same time, delimited formats—or fixed-width text formats—are also almost the only ones you can easily open and make sense of in a text editor or easily manipulate using command-line tools for text processing. Thereby delimited files are pretty much the only ones you can fix fully manually without specialized readers and libraries. Of course, formats that rigorously enforce structural constraints do avoid some of the need to do this. Later in this chapter, and in the next two chapters, a number of formats that enforce structure more are discussed.

One issue that you could encounter in reading CSV or other textual files is the actual character set encoding may not be the one you expect, or that is the default on your current system. In this age of Unicode, this concern is diminishing, but only slowly, and archival files continue to exist. This topic is discussed in chapter 3 (Data Ingestion – Repurposing Data Sources) in the section Custom Text Formats.

As a quick example, suppose you have just received a medium sized CSV file, and you want to make a quick sanity check on it. At this stage, we are concerned about whether the file is formatted correctly at all. We can do this with command-line tools, even if most libraries are likely to choke on them (such as shown in the next code cell). Of course, we could also use Python, R, or another general-purpose language if we just consider the lines as text initially.

# Use try/except to avoid full traceback in example

try:

pd.read_csv('data/big-random.csv')

except Exception as err:

print_err(err)

ParserError Error tokenizing data. C error: Expected 6 fields in line 75, saw 8

What went wrong there? Let us check.

%%bash # What is the general size/shape of this file? wc data/big-random.csv

100000 100000 4335846 data/big-random.csv

Great! 100,000 rows; but there is some sort of problem on line 75 according to Pandas (and perhaps on other lines as well). Using a single piped bash command which counts commas per line might provide insight. We could absolutely perform this same analysis in Python, R, or other languages; however, being familiar with command-line tools is a benefit to data scientists in performing one-off analyses like this.

%%bash

cat data/big-random.csv |

tr -d -c ',\n' |

awk '{ print length; }' |

sort |

uniq -c

46 3

99909 5

45 7

So we have figured out already that 99,909 of the lines have the expected 5 commas. But 46 have a deficit and 45 a surplus. Perhaps we will simply discard the bad lines, but that is not altogether too many to consider fixing by hand, even in a text editor. We need to make a judgement, on a per problem basis, about both the relative effort and reliability of automation of fixes versus manual approaches. Let us take a look at a few of the problem rows.

%%bash

grep -C1 -nP '^([^,]+,){7}' data/big-random.csv | head

74-squarcerai,45,quiescenze,12,scuoieremo,70 75:fantasmagorici,28,immischiavate,44,schiavizzammo,97,sfilzarono,49 76-interagiste,50,repentagli,72,attendato,95 -- 712-resettando,58,strisciato,46,insaldai,62 713:aspirasse,15,imbozzimatrici,70,incanalante,93,succhieremo,41 714-saccarometriche,18,stremaste,12,hindi,19 -- 8096-squincio,16,biascicona,93,solisti,70 8097:rinegoziante,50,circoncidiamo,83,stringavate,79,stipularono,34

Looking at these lists of Italian words and integers of slightly varying number of fields does not immediately illuminate the nature of the problem. We likely need more domain or problem knowledge. However, given that fewer than 1% of rows are a problem, perhaps we should simply discard them for now. If you do decide to make a modification such as removing rows, then versioning the data, with accompanying documentation of change history and reasons, becomes crucial to good data and process provenance.

This next cell uses a regular expression to filter the lines in the "almost CSV" file. The pattern may appear

confusing, but regular expressions provide a compact way of describing patterns in text. The match in

pat

indicates that from beginning of a line (^) until the end of that line ($) there are

exactly

five repetitions of character sequences that do not include commas, each followed by one comma

([^,]+,).

import re

pat = re.compile(r'^([^,]+,){5}[^,]*$')

with open('data/big-random.csv') as fh:

lines = [l.strip().split(',')

for l in fh if re.match(pat, l)]

pd.DataFrame(lines)

| 0 | 1 | 2 | 3 | 4 | 5 | |

|---|---|---|---|---|---|---|

| 0 | infilaste | 21 | esemplava | 15 | stabaccavo | 73 |

| 1 | abbadaste | 50 | enartrosi | 85 | iella | 54 |

| 2 | frustulo | 77 | temporale | 83 | scoppianti | 91 |

| 3 | gavocciolo | 84 | postelegrafiche | 93 | inglesizzanti | 63 |

| ... | ... | ... | ... | ... | ... | ... |

| 99905 | notareschi | 60 | paganico | 64 | esecutavamo | 20 |

| 99906 | rispranghiamo | 11 | schioccano | 44 | imbozzarono | 80 |

| 99907 | compone | 85 | disfronderebbe | 19 | vaporizzavo | 54 |

| 99908 | ritardata | 29 | scordare | 43 | appuntirebbe | 24 |

99909 rows × 6 columns

In the code we managed, within Python, to read all rows without formatting problems. We could also have used

the

pd.read_csv() parameter error_bad_lines=False to achieve the same effect, but walking

through it in plain Python and Bash gives you a better picture of why they are excluded.

Let us return to some virtues and deficits of CSV files. Here when we mention CSV, we really mean any kind of

delimited file. And specifically, text files that store tabular data nearly always use a single character for a

delimiter, and end rows/records with a newline (or carriage return and newline in legacy formats). Other than

commas,

probably the most common delimiters you will encounter are tabs and the pipe character |. However,

nearly

all tools are more than happy to use an arbitrary character.

Fixed-width files are similar to delimited ones. Technically they are different in that, although they are line oriented, they put each field of data in specific character positions within each line. An example is used in the next code cell below. Decades ago, when Fortran and Cobol were more popular, fixed-width formats were more prevalent; my perception is that their use has diminished in favor of delimited files. In any case, fixed-width textual data files have most of the same pitfalls and strengths as do delimited ones.

The Bad

Columns in delimited or flat files do not carry a data type, being simply text values. Many tools will (optionally) make guesses about the data type, but these are subject to pitfalls. Moreover, even where the tools accurately guess the broad type category (i.e. string vs. integer vs. real number) they cannot guess the specific bit length desired, where that matters.

Likewise, the representation used for "real" numbers is not encoded—most systems deal with IEEE-754 floating-point numbers of some length, but occasionally decimals of some specific length are more appropriate for a purpose.

The most typical way that type inference goes wrong is where the initial records in some data set have an apparent pattern, but later records deviate from this. The software library may infer one data type but later encounter strings that cannot be cast as such. "Earlier" and "later" here can have several different meanings.

For out-of-core data frame libraries like Vaex and Dask (Python libraries) that read lazily, type heuristics might be applied to a first few records (and perhaps some other sampling) but will not see those strings that do not follow the assumed pattern. However, later might also mean months later, when new data arrives.partnum

Most data frame libraries are greedy about inferring data types—although all will allow manual specification to shortcut inference.

For many layouts, data frame libraries can guess a fixed-width format and infer column positions and data types

(where it cannot guess, we could manually specify). But the guesses about data types can go wrong. For example,

viewing the raw text, we see a fixed-width layout in parts.fwf.

%%bash cat data/parts.fwf

Part_No Description Maker Price (USD) 12345 Wankle rotary engine Acme Corporation 555.55 67890 Sousaphone Marching Inc. 333.33 2468 Feather Duster Sweeps Bros 22.22 A9922 Area 51 metal fragment No Such Agency 9999.99

Reading in a few rows of data:

Reading this with Pandas correctly infers the intended column positions for the fields.

df = pd.read_fwf('data/parts.fwf', nrows=3)

df

| Part_No | Description | Maker | Price (USD) | |

|---|---|---|---|---|

| 0 | 12345 | Wankle rotary engine | Acme Corporation | 555.55 |

| 1 | 67890 | Sousaphone | Marching Inc. | 333.33 |

| 2 | 2468 | Feather Duster | Sweeps Bros | 22.22 |

df.dtypes

Part_No int64 Description object Maker object Price (USD) float64 dtype: object

We deliberately only read the start of the parts.fwf file. From those first few rows, Pandas made

a type

inference of int64 for the Part_No column.

Let us read the entire file. Pandas does the "right thing" here: Part_No becomes a generic object,

i.e.

string. However, if we had a million rows instead, and the heuristics Pandas uses, for speed and memory

efficiency,

happened to limit inference to the first 100,000 rows, we might not be so lucky.

df = pd.read_fwf('data/parts.fwf')

df

| Part_No | Description | Maker | Price (USD) | |

|---|---|---|---|---|

| 0 | 12345 | Wankle rotary engine | Acme Corporation | 555.55 |

| 1 | 67890 | Sousaphone | Marching Inc. | 333.33 |

| 2 | 2468 | Feather Duster | Sweeps Bros | 22.22 |

| 3 | A9922 | Area 51 metal fragment | No Such Agency | 9999.99 |

df.dtypes # type of `Part_No` changed

Part_No object Description object Maker object Price (USD) float64 dtype: object

R tibbles behave the same as Pandas, with the minor difference that data type imputation always uses 1000 rows (and will discard values if inconsistencies occur thereafter). Pandas can be configured to read all rows for inference, but by default reads a dynamically determined number. Pandas will sample more rows than R does, but still only approximately tens of thousands. The R collections data.frame and data.table are likewise similar. Let us reading the same file as above using R.

%%capture --no-stdout err

%%R

read_table('data/parts.fwf')

── Column specification ────────────────────────────────────────────────────────────────── cols( Part_No = col_character(), Description = col_character(), Maker = col_character(), `Price (USD)` = col_double() ) # A tibble: 4 x 4 Part_No Description Maker `Price (USD)` <chr> <chr> <chr> <dbl> 1 12345 Wankle rotary engine Acme Corporation 556. 2 67890 Sousaphone Marching Inc. 333. 3 2468 Feather Duster Sweeps Bros 22.2 4 A9922 Area 51 metal fragment No Such Agency 10000.

Again, the first three rows are consistent with an integer data type, although this is inaccurate for later rows.

%%R

read_table('data/parts.fwf',

n_max = 3,

col_types = cols("i", "-", "f", "n"))

# A tibble: 3 x 3

Part_No Maker `Price (USD)`

<int> <fct> <dbl>

1 12345 Acme Corporation 556.

2 67890 Marching Inc. 333.

3 2468 Sweeps Bros 22.2

Delimited files—but not so much fixed-width files—are prone to escaping issues. In particular, CSVs specifically often contain descriptive fields that sometimes contain commas within the value itself. When done right, this comma should be escaped. It is often not done right in practice.

CSV is actually a family of different dialects, mostly varying in their escaping conventions. Sometimes spacing before or after commas is treated differently across dialects as well. One approach to escaping is to put quotes around either every string value, or every value of any kind, or perhaps only those values that contain the prohibited comma. This varies by tool and by the version of the tool. Of course, if you quote fields, there is potentially a need to escape those quotes; usually this is done by placing a backslash before the quote character when it is part of the value.

An alternate approach is to place a backslash before those commas that are not intended as a delimeter but

rather

part of a string value (or numeric value that might be formatted, e.g. $1,234.56). Guessing the

variant

can be a mess, and even single files are not necessarily self consistent between rows, in practice (often

different

tools or versions of tools have touched the data).

Tab-separated and pipe-separated formats are often chosen with the hope of avoiding escaping issues. This works

to an

extent. Both tabs and pipe symbols are far less common in ordinary prose. But both still wind up occurring in

text

occasionally, and all the escaping issues come back. Moreover, in the face of escaping, the simplest tools

sometimes

fail. For example, the bash command cut -d, will not work in these cases, nor will Python's

str.split(','). A more custom parser becomes necessary, albeit a simple one compared to

full-fledged

grammars. Python's standard library csv module is one such custom parser.

The corresponding danger for fixed-width files, in contrast to delimited ones, is that values become too long. Within a certain line position range you can have any codepoints whatsoever (other than newlines). But once the description or name that someone thought would never be longer than, say, 20 characters becomes 21 characters, the format fails.

A special consideration arises around reading datetime formats. Data frame libraries that read datetime values typically have an optional switch to parse certain columns as datetime formats. Libraries such as Pandas support heuristic guessing of datetime formats; the problem here is that applying a heuristic to each of millions of rows can be exceedingly slow. Where a date format is uniform, using a manual format specifier can make it several orders of magnitude faster to read. Of course, where the format varies, heuristics are practically magic; and perhaps we should simply marvel that the dog can talk at all rather than criticize its grammar. Let us look at a Pandas' attempt to guess datetimes for each row of a tab-separated file.

%%bash # Notice many date formats cat data/parts.tsv

Part_No Description Date Price (USD) 12345 Wankle rotary 2020-04-12T15:53:21 555.55 67890 Sousaphone April 12, 2020 333.33 2468 Feather Duster 4/12/2020 22.22 A9922 Area 51 metal 04/12/20 9999.99

# Let Pandas make guesses for each row

# VERY SLOW for large tables

parts = pd.read_csv('data/parts.tsv',

sep='\t', parse_dates=['Date'])

parts

| Part_No | Description | Date | Price (USD) | |

|---|---|---|---|---|

| 0 | 12345 | Wankle rotary | 2020-04-12 15:53:21 | 555.55 |

| 1 | 67890 | Sousaphone | 2020-04-12 00:00:00 | 333.33 |

| 2 | 2468 | Feather Duster | 2020-04-12 00:00:00 | 22.22 |

| 3 | A9922 | Area 51 metal | 2020-04-12 00:00:00 | 9999.99 |

We can verify that the dates are genuinely a datetime data type within the DataFrame.

parts.dtypes

Part_No object Description object Date datetime64[ns] Price (USD) float64 dtype: object

We have looked at some challenges and limitations of delimited and fixed-width formats, let us consider their considerable advantages as well.

The Good

The biggest strength of CSV files, and their delimited or fixed-width cousins, is the ubiquity of tools to read

and

write them. Every library dealing with data frames or arrays, across every programming language, knows how to

handle

them. Most of the time the libraries parse the quirky cases pretty well. Every spreadsheet program imports and

exports

as CSV. Every RDBMS—and most non-relational databases as well—imports and exports as CSV. Most programmers' text

editors even have facilities to make editing CSV easier. Python has a standard library module csv

that

processes many dialects of CSV (or other delimited formats) as a line-by-line record reader.

The fact that so very many structurally flawed CSV files live in the wild shows that not every tool

handles

them entirely correctly. In part, that is probably because the format is simple enough to almost work

without

custom tools at all. I have myself, in a "throw-away script," written

print(",".join([1,2,3,4]), file=csv) countless times; that works well, until it doesn't. Of course,

throw-away scripts become fixed standard procedures for data flow far too often.

The lack of type specification is often a strength rather than a weakness. For example, the part numbers mentioned a few pages ago may have started out always being integers as an actual business intention, but later on a need arose to use non-integer "numbers." With formats that have a formal type specifier, we generally have to perform a migration and copy to move old data into a new format that follows the loosened or revised constraints.

One particular case where a data type change happens especially often, in my experience, is with finite-width character fields. Initially some field is specified as needing 5, or 15, or 100 characters for its maximum length, but then a need for a longer string is encountered later, and a fixed table structure or SQL database needs to be modified to accommodate the longer length. Even more often—especially with databases—the requirement is underdocumented, and we wind up with a data set filled with truncated strings that are of little utility (and perhaps permanently lost data).

Text formats in general are usually flexible in this regard. Delimited files—but not fixed-width files—will happily contain fields of arbitrary length. This is similarly true of JSON data, YAML dataconfig, XML data, log files, and some other formats that simply utilize text, often with line-oriented records. In all of this, data typing is very loose and only genuinely exists in the data processing steps. That is often a great virtue.

A related "looseness" of CSV and similar formats is that we often indefinitely aggregate multiple CSV files that follow the same informal schema. Writing a different CSV file for each day, or each hour, or each month, of some ongoing data collection is very commonplace. Many tools, such as Dask and Spark will seamlessly treat collections of CSV files (matching a glob pattern on the file system) as a single data set. Of course, in tools that do not directly support this, manual concatenation is still not difficult. But under the model of having a directory that contains an indefinite number of related CSV snapshots, presenting it as a single common object is helpful.

The libraries that handle families of CSV files seamlessly are generally lazy and distributed. That is, with these tools, you do not typically read in all the CSV files at once, or at least not into the main memory of a single machine. Rather, various cores or various nodes in a cluster will each obtain file handles to individual files, and the schema information will be inferred from only one or a few of the files, with actual processing deferred until a specific (parallel) computation is launched. Splitting processing of an individual CSV file across cores is not easily tractable since a reader can only determine where a new record begins by scanning until it finds a newline.

While details of the specific APIs of libraries for distributed data frames is outside the scope of this book, the fact that parallelism is easily possible given an initial division of data into many files is a significant strength for CSV as a format. Dask in particular works by creating many Pandas DataFrames and coordinating computation upon all of them (or those needed for a given result) with an API that exactly copies the same methods of individual Pandas objects.

# Generated data files with random values

from glob import glob

# Use glob() function to identify files matching pattern

glob('data/multicsv/2000-*.csv')[:8] # ... and more

['data/multicsv/2000-01-27.csv', 'data/multicsv/2000-01-26.csv', 'data/multicsv/2000-01-06.csv', 'data/multicsv/2000-01-20.csv', 'data/multicsv/2000-01-13.csv', 'data/multicsv/2000-01-22.csv', 'data/multicsv/2000-01-21.csv', 'data/multicsv/2000-01-24.csv']

We read this family of CSV files into one virtualized DataFrame that acts like a Pandas DataFrame, even if loading it with Pandas would require more memory than our local system allows. In this specific example, the collection of CSV files is not genuinely too large for a modern workstation to read into memory; but when it becomes so, using some distributed or out-of-core system like Dask is necessary to proceed at all.

import dask.dataframe as dd

df = dd.read_csv('data/multicsv/2000-*-*.csv',

parse_dates=['timestamp'])

print("Total rows:", len(df))

df.head()

Total rows: 2592000

| timestamp | id | name | x | y | |

|---|---|---|---|---|---|

| 0 | 2000-01-01 00:00:00 | 979 | Zelda | 0.802163 | 0.166619 |

| 1 | 2000-01-01 00:00:01 | 1019 | Ingrid | -0.349999 | 0.704687 |

| 2 | 2000-01-01 00:00:02 | 1007 | Hannah | -0.169853 | -0.050842 |

| 3 | 2000-01-01 00:00:03 | 1034 | Ursula | 0.868090 | -0.190783 |

| 4 | 2000-01-01 00:00:04 | 1024 | Ingrid | 0.083798 | 0.109101 |

When we require some summary to be computed, Dask will coordinate workers to aggregate on each individual DataFrame, then aggregate those aggregations. There are more nuanced issues of what operations can be reframed in this "map-reduce" style and which cannot, but that is the general idea (and the Dask or Spark developers have thought about this for you so you do not have to).

df.mean().compute()

id 999.965606 x 0.000096 y 0.000081 dtype: float64

Having looked at some pros and cons of working with CSV data, let us turn to another format where a great deal of data is stored. Unfortunately, for spreadsheets, there are almost exclusively cons.

Drugs are bad. m'kay. You shouldn't do drugs. m'kay. If you do them you're bad, because drugs are bad. m'kay. It's a bad thing to do drugs, so don't be bad by doing drugs. m'kay.

–Mr. Mackay (South Park)

Concepts:

Edward Tufte, that brilliant doyen of information visualization, wrote an essay called The Cognitive Style of Powerpoint: Pitching Out Corrupts Within. Amongst his observations is that the manner in which slide presentations, and Powerpoint specifically, hides important information more than it reveals it, was a major or even main cause of the 2003 Columbia space shuttle disaster. Powerpoint is anathema to clear presentation of information.

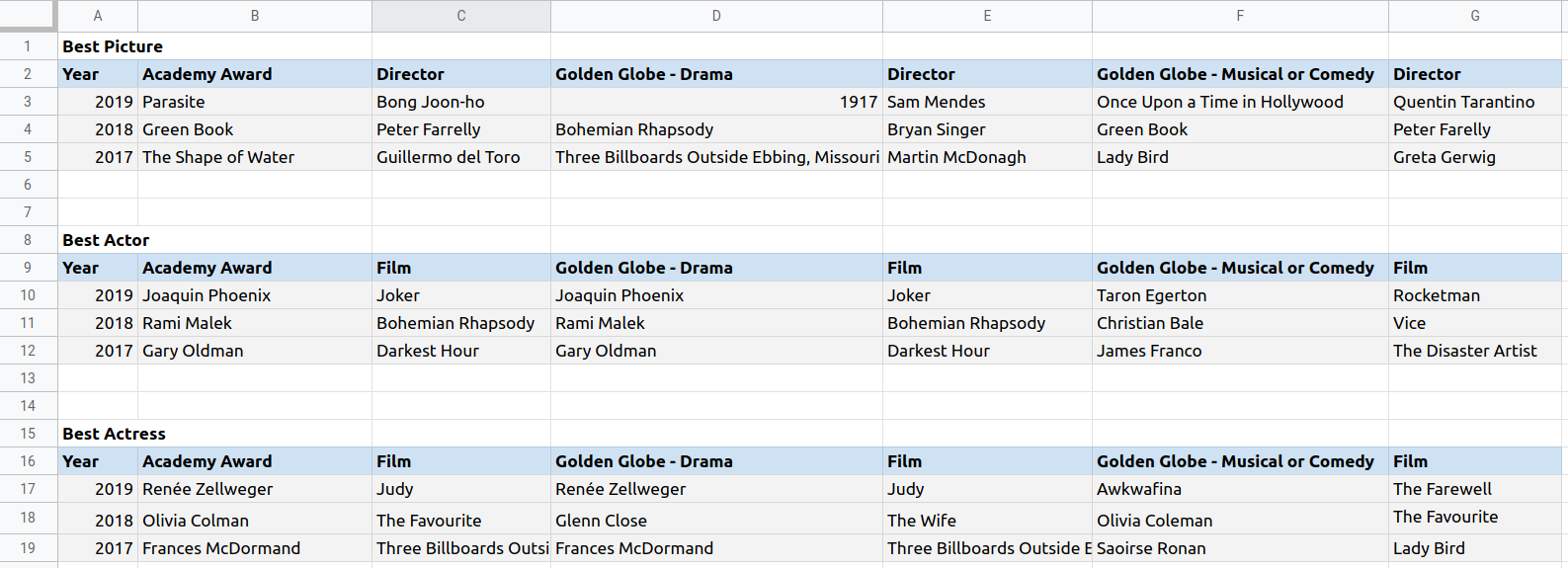

To no less of a degree, spreadsheets in general, and Excel in particular, are anathema to effective data science. While perhaps not as much as in CSV files, a great share of the world's data lives in Excel spreadsheets. There are numerous kinds of data corruption that are the special realm of spreadsheets. As a bonus, data science tools read spreadsheets much more slowly than they do every other format, while spreadsheets also have hard limits on the amount of data they can contain that other formats do not impose.

Most of what spreadsheets do to make themselves convenient for their users makes them bad for scientfic reproducibility, data science, statistics, data analysis, and related areas.computation Spreadsheets have apparent rows and columns in them, but nothing enforces consistent use of those, even within a single sheet. Some particular feature often lives in column F for some rows, but the equivalent thing is in column H for other rows, for example. Contrast this with a CSV file or an SQL table; for these latter formats, while all the data in a column is not necessarily good data, it generally must pertain to the same feature.

Another danger of spreadsheets is not around data ingestion, per se, at all. Computation within spreadsheets is spread among many cells in no obvious or easily inspectable order, leading to numerous large-scale disasterous consequences (loss of billions in financial transaction; a worldwide economic planning debacle; a massive failure of Covid-19 contact tracing in the UK). The European Spreadsheet Risks Interest Group is an entire organization devoted to chronicling such errors. They present a number of lovely quotes, including this one:

There is a literature on denial, which focuses on illness and the fact that many people with terminal illnesses deny the seriousness of their condition or the need to take action. Apparently, what is very difficult and unpleasant to do is difficult to contemplate. Although denial has only been studied extensively in the medical literature, it is likely to appear whenever required actions are difficult or onerous. Given the effortful nature of spreadsheet testing, developers may be victims of denial, which may manifest itself in the form of overconfidence in accuracy so that extensive testing will not be needed. –Ray Panko, 2003

In procedural programming (including object-oriented programming), actions flow sequentially through code, with clear locations for branches or function calls; even in functional paradigms, compositions are explicitly stated. In spreadsheets it is anyone's guess what computation depends on what else, and what data ranges are actually included. Errors can occasionally be found accidentally, but program analysis and debugging are *nearly* impossible. Users who know only, or mostly, spreadsheets will likely object that *some* tools exist to identify dependencies within a spreadsheet; this is technically true in the same sense as that many goods transported by freight train could also be carried on a wheelbarrow.

Moreover, every cell in a spreadsheet can have a different data type. Usually the type is assigned by heuristic guesses within the spreadsheet interface. These are highly sensitive to the exact keystrokes used, the order cells are entered, whether data is copy/pasted between blocks, and numerous other things that are both hard to predict and that change between every version of every spreadsheet software program. Infamously, for example, Excel interprets the gene name 'SEPT2' (Septin 2) as a date (at least in a wide range of versions). Compounding the problem, the interfaces of spreadsheets make determining the data type for a given cell uncomfortably difficult.

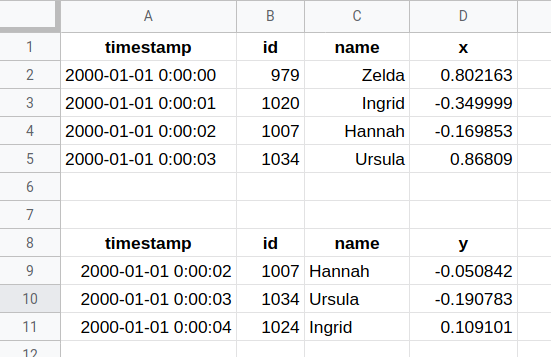

Let us start with an example. The screenshot below is of a commonplace and ordinary looking spreadsheet. Yes, some values are not aligned in their cells exactly consistently, but that is purely an aesthetic issue. The first problem that jumps out at us is the fact that one sheet is being used to represent two different (in this case related) tables of data. Already this is going to be difficult to make tidy.

Image: Excel Pitfalls

If we simply tell Pandas (or specifically the supporting openpyxl library) to try to make sense of this file, it makes a sincere effort and applies fairly intelligent heuristics. It does not crash, to its credit. Other DataFrame libraries will be similar, with different quirks you will need to learn. But what went wrong that we can see initially?

# Default engine `xlrd` might have bug in Python 3.9

pd.read_excel('data/Excel-Pitfalls.xlsx',

sheet_name="Dask Sample", engine="openpyxl")

| timestamp | id | name | x | |

|---|---|---|---|---|

| 0 | 2000-01-01 00:00:00 | 979 | Zelda | 0.802163 |

| 1 | 2000-01-01 0:00:01 | 1019.5 | Ingrid | -0.349999 |

| 2 | 2000-01-01 00:00:02 | 1007 | Hannah | -0.169853 |

| 3 | 2000-01-01 00:00:03 | 1034 | Ursula | 0.86809 |

| 4 | timestamp | id | name | y |

| 5 | 2000-01-01 00:00:02 | 1007 | Hannah | -0.050842 |

| 6 | 2000-01-01 00:00:03 | 1034 | Ursula | -0.190783 |

| 7 | 2000-01-01 00:00:04 | 1024 | Ingrid | 0.109101 |

Right away we can notice that the id column contains a value 1019.5 that was invisible in the

spreadsheet display. Whether that column is intended as a floating-point or an integer is not obvious at this

point.

Moreover, notice that visually the date on that same row looks slightly wrong. We will come back to this.

As a first step, we can, with laborious manual intervention, pull out the two separate tables we actually care about. Pandas is actually a little bit too smart here—it will, by default, ignore the data typing actually in the spreadsheet and do inference similar to what it does with a CSV file. For this purpose, we tell it to use the data type actually stored by Excel. Pandas' inference is not a panacea, but it is a useful option at times (it can fix some, but not all, of the issues we note below; however, other things are made worse). For the next few paragraphs, we wish to see the raw data types stored in the spreadsheet itself.

df1 = pd.read_excel('data/Excel-Pitfalls.xlsx',

nrows=5, dtype=object, engine="openpyxl")

df1.loc[:2] # Just look at first few rows

| timestamp | id | name | x | |

|---|---|---|---|---|

| 0 | 2000-01-01 00:00:00 | 979 | Zelda | 0.802163 |

| 1 | 2000-01-01 00:00:01 | 1019.5 | Ingrid | -0.349999 |

| 2 | 2000-01-01 00:00:02 | 1007 | Hannah | -0.169853 |

We can read the second implicit table as well by using the pd.read_excel() parameter

skiprows.

pd.read_excel('data/Excel-Pitfalls.xlsx', skiprows=7, engine="openpyxl")

| timestamp | id | name | y | |

|---|---|---|---|---|

| 0 | 2000-01-01 00:00:02 | 1007 | Hannah | -0.050842 |

| 1 | 2000-01-01 00:00:03 | 1034 | Ursula | -0.190783 |

| 2 | 2000-01-01 00:00:04 | 1024 | Ingrid | 0.109101 |

If we look at the data types read in, we will see they are all Python objects to preserve the various cell types. But let us look more closely at what we actually have.

df1.dtypes

timestamp datetime64[ns] id object name object x object dtype: object

The timestamps in this particular small example are all reasonable to parse with Pandas. But real-life spreadsheets often provide something much more ambiguous, often impossible to parse as dates. Look above at the screenshot of a spreadsheet to notice that the data type is invisible in the spreadsheet itself. We can find the Python data type of the generic object stored in each cell.

# Look at the stored data type of each cell

tss = df1.loc[:2, 'timestamp']

for i, ts in enumerate(tss):

print(f"TS {i}: {ts}\t{ts.__class__.__name__}")

TS 0: 2000-01-01 00:00:00 Timestamp TS 1: 2000-01-01 00:00:01 Timestamp TS 2: 2000-01-01 00:00:02 Timestamp

The Pandas to_datetime() function is idempotentidempotent and would have run if

we had

not specifically disabled it in by using dtype=object in the pd.read_excel() call.

However,

many spreadsheets are far messier, and the conversion will simply not succeed, producing an object

column

in any case. Particular cells in a column might contain numbers, formulae, or strings looking nothing like dates

(or

sometimes strings looking just enough like date string that a human, but not a machine, might guess the intent;

say

'Decc 23,, 201.9').

Let us look at using pd.to_datetime().

pd.to_datetime(tss)

0 2000-01-01 00:00:00 1 2000-01-01 00:00:01 2 2000-01-01 00:00:02 Name: timestamp, dtype: datetime64[ns]

Other columns pose a similar difficulty. The values that look identical in the spreadsheet view of the

id column are actually a mixture of integers, floating-point numbers, and strings. It is

conceivable that such was the intention, but in practice it is almost always an accidental result of

the ways

that spreadsheets hide information from their users. By the time these data sets arrive on your data science

desk,

they are merely messy, and the causes are lost in the sands of time. Let us look at the data types in the

id column.

# Look at the stored data type of each cell

ids = df1.loc[:3, 'id']

for i, id_ in enumerate(ids):

print(f"id {i}: {id_}\t{id_.__class__.__name__}")

id 0: 979 int id 1: 1019.5 float id 2: 1007 int id 3: 1034 str

Of course tools like Pandas can type cast values subsequent to reading them, but we require domain-specific

knowledge

of the data set to know what cast is appropriate. Let us cast data using the .astype() method:

ids.astype(int)

0 979 1 1019 2 1007 3 1034 Name: id, dtype: int64

Putting together the cleanup we mention, we might carefully type our data in a manner similar to the following.

# Only rows through index `3` are useful

# We are casting to more specific data types

# based on domain and problem knowledge

df1 = df1.loc[0:3].astype(

{'id': np.uint16,

'name': pd.StringDtype(),

'x': float})

# datetimes require conversion function, not just type

df1['timestamp'] = pd.to_datetime(df1.timestamp)

print(df1.dtypes)

timestamp datetime64[ns] id uint16 name string x float64 dtype: object

df1.set_index('timestamp')

| id | name | x | |

|---|---|---|---|

| timestamp | |||

| 2000-01-01 00:00:00 | 979 | Zelda | 0.802163 |

| 2000-01-01 00:00:01 | 1019 | Ingrid | -0.349999 |

| 2000-01-01 00:00:02 | 1007 | Hannah | -0.169853 |

| 2000-01-01 00:00:03 | 1034 | Ursula | 0.868090 |

What makes spreadsheets harmful is not principally their underlying data formats. Non-ancient versions of Excel (.xlsx), LibreOffice (OpenDocument, .ods), and Gnumeric (.gnm) have all adopted a similar format at the byte level. That is, they all store their data in XML formats, then compress those to save space. As I mentioned, this is slower than other approaches, but that concern is secondary.

If one of these spreadsheet formats were used purely as an exchange format among structured tools, they would be perfectly suitable to preserve and represent data. It is instead the social and user interface (UI) elements of spreadsheets that make them dangerous. The "tabular" format of Excel combines the worst elements of untyped CSV and strongly typed SQL databases. Rather than assign a data type by column/feature, it allows type assignments per cell.

Per-cell typing is almost always the wrong thing to do for any data science purpose. It neither allows flexible decisions by programming tools (either using inference or type declaration APIs) nor does it enforce consistency of different values that should belong to the same feature at the time data is stored. Moreover, the relatively free-form style of entry in the user interfaces of spreadsheets does nothing to guide users away from numerous kinds of entry errors (not only data typing, but also various misalignments within the grid, accidental deletions or insertions, and so on). Metaphorically, the danger posed by spreadsheet UIs resembles the concept in tort law of an "attractive nuisance"—they do not directly create the harm, but they make harm exceedingly likely with minor inattention.

Unfortunately, there do not currently exist any general purpose data entry tools in widespread use. Database entry forms could serve the purpose of enforcing structure on data entry, but they are limited for non-programmatic data exploration. Moreover, use of structured forms, whatever the format where the data might be subsequently stored, currently requires at least a modicum of software development effort, and many general users of spreadsheets lack this ability. Something similar to a spreadsheet, but that allowed locking data type constraints on columns, would be a welcome addition to the world. Perhaps one or several of my readers will create and popularize such tools.

For now, the reality is that many users will create spreadsheets that you will need to extract data from as a data scientist. This will inevitably be more work for you than if you were provided a different format. But think carefully about the block regions and tabs/sheets that are of actual relevance, to the problem-required data types for casts, and about how to clean unprocessable values. With effort the data will enter your data pipelines.

We can turn now to well structured and carefully date typed formats; those stored in relational databases.

At the time, Nixon was normalizing relations with China. I figured that if he could normalize relations, then so could I.

–E. F. Codd (inventor of relational database theory)

Concepts:

Relational databases management systems (RDBMS) are enormously powerful and versatile. For the most part, their requirement of strict column typing and frequent use of formal foreign keys and constraints is a great boon for data science. While specific RDBMS's vary greatly in how well normalized, indexed, and designed they are—not every organization has or utilizes a database engineer specifically—even somewhat informally assembled databases have many desirable properties for data science. Not all relational databases are tidy, but they all take you several large steps in that direction.

Working with relational databases requires some knowledge of Structured Query Language (SQL). For small data, and perhaps for medium-sized data, you can get by with reading entire tables into memory as data frames. Operations like filtering, sorting, grouping, and even joins can be performed with data frame libraries. However, it is much more efficient if you are able to do these kinds of operations directly at the database level; it is an absolute necessity when working with big data. A database that has millions or billions of records, distributed across tens or hundreds of related tables, can itself quickly produce the hundreds of thousands of rows (tuples) that you need for the task at hand. But loading all of these rows into memory is either unnecessary or simply impossible.

There are many excellent books and tutorials on SQL. I do not have a specific one to recommend over others, but

finding a suitable text to get up to speed—if you are not already—is not difficult. The general concepts of

GROUP BY, JOIN, and WHERE clauses are the main things you should know as

a data

scientist. If you have a bit more control over the database you pull data from, knowing something about how to

intelligently index tables, and optimize slow queries by reformulation and looking at EXPLAIN

output, is

helpful. However, it is quite likely that you, as a data scientist, will not have full access to database

administration. If you do have such access: be careful!

For this book, I use a local PostgreSQL server to illustrate APIs. I find that PostgreSQL is

vastly

better at query optimization than is its main open source competitor, MySQL. Both behave

equally well

with careful index tuning, but generally PostgreSQL is much faster for queries that must be optimized on an ad

hoc

basis by the query planner. In general, almost all of the APIs I show will be nearly identical across drivers in

Python or in R (and in most other languages) whether you use PostgreSQL, MySQL, Oracle DB, DB2, SQL Server, or

any

other RDBMS. The Python DB-API, in particular, is well standardized across drivers. Even the single-file RDBMS,

SQLite3, which is included in the Python standard library is almost DB-API compliant (and

.sqlite is a very good storage format).

Within the setup.py module that is loaded by each chapter and is available within the source code

repository, some database setup is performed. If you run some of the functions contained therein, you will be

able to

create generally the same configuration on your system as I have on the one where I am writing this. Actual

installation of an RDBMS is not addressed in this book; see the instructions accompanying your database

software. But

a key, and simple step, is creating a connection to the DB.

# Similar with adapter other than psycopg2

con = psycopg2.connect(database=db, host=host,

user=user, password=pwd)

This connection object will be used in subsequent code in this book. We also create an engine

object

that is an SQLAlchemy wrapper around a connection that adds some enhancements. Some libraries

like

Pandas require using an engine rather than only a connection. We can create that as follows:

engine = create_engine(

f'postgresql://{user}:{pwd}@{host}:{port}/{db}')

I used the Dask data created earlier in this chapter to populate a table with the following schema. These metadata values are defined in the RDBMS itself. Within this section, we will work with the elaborate and precise data types that relational databases provide.

| Column | Data Type | Data Width |

|---|---|---|

| index | integer | 32 |

| timestamp | timestamp without time zone | None |

| id | smallint | 16 |

| name | character | 10 |

| x | numeric | 6 |

| y | real | 24 |

This is the same data structure created in the previous Dask discussion, but I have somewhat arbitrarily

imposed more

specific data types on the fields. The PostgreSQL "data width" shown is a bit odd; it mixes bit length with byte

length depending on the type. Moreover, for the floating-point y it shows the bit length of the

mantissa,

not of the entire 32-bit memory word. But in general we can see that different columns have different specific

types.

When designing tables, database engineers generally try to choose data widths that are sufficient for the purpose, but also as small as the requirement allows. If you need to store billions of person ages, for example, a 256-bit integer could certainly hold those numbers, but an 8-bit integer can also hold all the values that can occur using 1/32nd as much storage space.

Using the Python DB-API loses some data type information. It does pretty well, but Python does not

have a

full range of native types. The fractional numbers are accurately stored as either Decimal or

native

floating-point, but the specific bit lengths are lost. Likewise, the integer is a Python integer of unbounded

size.

The name strings are always 10 characters long, but for most purposes we probably want to apply

str.rstrip() (strip whitespace at right end) to take off the surrounding whitespace.

# Function connect_local() spelled out in chapter 4 (Anomaly Detection)

con, engine = connect_local()

cur = con.cursor()

cur.execute("SELECT * FROM dask_sample")

pprint(cur.fetchmany(2))

[(3456,

datetime.datetime(2000, 1, 2, 0, 57, 36),

941,

'Alice ',

Decimal('-0.612'),

-0.636485),

(3457,

datetime.datetime(2000, 1, 2, 0, 57, 37),

1004,

'Victor ',

Decimal('0.450'),

-0.68771815)]

Unfortunately, we lose even more data type information using Pandas (at least as of Pandas 1.0.1 and SQLAlchemy 1.3.13, current as of this writing). Pandas is able to use the full type system of NumPy, and even adds a few more custom types of its own. This richness is comparable to—but not necessarily identical with—the type systems provided by RDBMS's (which, in fact, vary from each other as well, especially in extension types). However, the translation layer only casts to basic string, float, int, and date types.

Let us read a PostgreSQL table into Pandas, and then examine what native data types were utilized to approximate that SQL data.

df = pd.read_sql('dask_sample', engine, index_col='index')

df.tail(3)

| timestamp | id | name | x | y | |

|---|---|---|---|---|---|

| index | |||||

| 5676 | 2000-01-02 01:34:36 | 1041 | Charlie | -0.587 | 0.206869 |

| 5677 | 2000-01-02 01:34:37 | 1017 | Ray | 0.311 | 0.256218 |

| 5678 | 2000-01-02 01:34:38 | 1036 | Yvonne | 0.409 | 0.535841 |

The specific dtypes within the DataFrame are:

df.dtypes

timestamp datetime64[ns] id int64 name object x float64 y float64 dtype: object

Although it is a bit more laborious, we can combine these techniques and still work with our data within a friendly data frame, but using more closely matched types (albeit not perfectly matched to the DB). The two drawbacks here are:

object columnsLet us endeavor to choose better data types for our data frame. We probably need to determine the precise types

from

the documentation of our RDBMS, since few people have the PostgreSQL type codes memorized. The DB-API cursor

object

has a .description attribute that contains column type codes.

cur.execute("SELECT * FROM dask_sample")

cur.description

(Column(name='index', type_code=23), Column(name='timestamp', type_code=1114), Column(name='id', type_code=21), Column(name='name', type_code=1042), Column(name='x', type_code=1700), Column(name='y', type_code=700))

We can introspect to see the Python types used in the results. Of course, these do not carry the bit lengths of

the

DB with them, so we will need to manually choose these. Datetime is straightforward enough to put into Pandas's

datetime64[ns] type.

rows = cur.fetchall() [type(v) for v in rows[0]]

[int, datetime.datetime, int, str, decimal.Decimal, float]

Working with Decimal numbers is tricker than other types. Python's standard library decimal module

complies with IBM’s General Decimal Arithmetic Specification;

unfortunately, databases do not. In particular, the IBM 1981 spec (with numerous updates) allows each

operation to be performed within some chosen "decimal context" that gives precision, rounding rules,

and

other things. This is simply different from having a decimal precision per column, with no

specific

control of rounding rules. We can usually ignore these nuances; but when they bite us, they can bite hard. The

issues

arise more in civil engineering and banking/finance than they do with data science as such, but these are

concerns to

be aware of.

In the next cell, we cast several columns to specific numeric data types with specific bit widths.

# Read the data with no imposed data types

df = pd.DataFrame(rows,

columns=[col.name for col in cur.description],

dtype=object)

# Assign specific int or float lengths to some fields

types = {'index': np.int32, 'id': np.int16, 'y': np.float32}

df = df.astype(types)

# Cast the Python datetime to a Pandas datetime

df['timestamp'] = pd.to_datetime(df.timestamp)

df.set_index('index').head(3)

| timestamp | id | name | x | y | |

|---|---|---|---|---|---|

| index | |||||

| 3456 | 2000-01-02 00:57:36 | 941 | Alice | -0.612 | -0.636485 |

| 3457 | 2000-01-02 00:57:37 | 1004 | Victor | 0.450 | -0.687718 |

| 3458 | 2000-01-02 00:57:38 | 980 | Quinn | 0.552 | 0.454158 |

We can verify those data types are used.

df.dtypes

index int32 timestamp datetime64[ns] id int16 name object x object y float32 dtype: object

Panda's "object" type hides the differences of the underlying classes of the Python objects stored. We can look at those more specifically.

pprint({repr(x): x.__class__.__name__

for x in df.reset_index().iloc[0]})

{"'Alice '": 'str',

'-0.636485': 'float32',

'0': 'int64',

'3456': 'int32',

'941': 'int16',

"Decimal('-0.612')": 'Decimal',

"Timestamp('2000-01-02 00:57:36')": 'Timestamp'}

For the most part, the steps for reading in SQL data in R are similar to those in Python. And so are the pitfalls around getting data types just right. We can see that the data types are the same rough approximations of the actual database types as Pandas produced. Obviously, in real code you should not specify passwords as literal values in the source code, but use some tool for secrets management.

%%capture --no-stdout err

%%R

require("RPostgreSQL")

drv <- dbDriver("PostgreSQL")

con <- dbConnect(drv, dbname = "dirty",

host = "localhost", port = 5432,

user = "cleaning", password = "data")

sql <- "SELECT id, name, x, y FROM dask_sample LIMIT 3"

data <- tibble(dbGetQuery(con, sql))

data

# A tibble: 3 x 4

id name x y

<int> <chr> <dbl> <dbl>

1 941 "Alice " -0.612 -0.636

2 1004 "Victor " 0.45 -0.688

3 980 "Quinn " 0.552 0.454

What is interesting to look at is that we might produce data frames that are not directly database tables (nor simply the first few rows, as in examples here), but rather some more complex manipulation or combination of that data. Joins are probably the most interesting case here since they take data from multiple tables. But grouping and aggregation is also frequently useful, and might reduce a million rows to a thousand summary descriptions, for example, which might be our goal.

%%capture --no-stdout err

%%R

sql <- "SELECT max(x) AS max_x, max(y) AS max_y,

name, count(name)

FROM dask_sample

WHERE id > 1050

GROUP BY name

ORDER BY count(name) DESC

LIMIT 12;"

# Here we simply retrieve a data.frame

# rather than convert to tibble

dbGetQuery(con, sql)

max_x max_y name count 1 0.733 0.7685581 Hannah 10 2 0.469 0.8493844 Norbert 10 3 0.961 0.7355076 Wendy 9 4 0.950 0.6730366 Quinn 8 5 0.892 0.8534938 Michael 7 6 0.772 0.9892333 Yvonne 7 7 0.958 0.8597920 Patricia 6 8 0.953 0.8659175 Ingrid 6 9 0.998 0.9807807 Oliver 6 10 0.050 0.5018596 Laura 6 11 0.399 0.8085724 Alice 5 12 0.604 0.8264008 Kevin 5

For the following example, I started with a data set that described Amtrak train stations in 2012. Many of the

fields

initially present were discarded, but some others were manipulated to illustrate some points. Think of this as

"fake

data" even though it is derived from a genuine data set. In particular, the column Visitors is

invented

whole cloth; I have never seen visitor count data, nor do I know if it is collected anywhere. It is just numbers

that

will have a pattern.

amtrak = pd.read_sql('bad_amtrak', engine)

amtrak.head()

| Code | StationName | City | State | Visitors | |

|---|---|---|---|---|---|

| 0 | ABB | Abbotsford-Colby | Colby | WI | 18631 |

| 1 | ABE | Aberdeen | Aberdeen | MD | 12286 |

| 2 | ABN | Absecon | Absecon | NJ | 5031 |

| 3 | ABQ | Albuquerque | Albuquerque | NM | 14285 |

| 4 | ACA | Antioch-Pittsburg | Antioch | CA | 16282 |

On the face of it—other than the telling name of the table we read in—nothing looks out of place. Let us look for problems. Notice that the tests below will, in a way, be anomaly detection, which is discussed in a later chapter. However, the anomalies we find are specific to SQL data typing.

String fields in RDBMS's are prone to truncation if specific character lengths are given. Modern database

systems

also have a VARCHAR or TEXT type for unlimited length strings, but often specific

lengths

are used in practice. To a certain degree, database operations can be more efficient with known text lengths, so

the

choice is not simple foolishness. But whatever the reason, you will find such fixed lengths frequently in

practice. In

particular, the StationName column is defined as CHAR(20). The question is: is that a

problem?

Knowing the character length will not automatically answer the question we care about. Perhaps Amtrak regulation requires a certain length of all station names. This is domain-specific knowledge that you may not have as a data scientist. In fact, the domain experts may not have it either, because it has not been analyzed or because rules have changed over time. Let us analyze the data itself.

Moreover, even if a database field is currently variable length or very long, it is quite possible that a column was altered over the life of a DB, or that a migration occurred. Unfortunately, multiple generations of old data that may each have been corrupted in their own ways can obscure detection.

One place you may encounter this problem data history is with dates in older data sets where two digit years

were

used. The "Y2K" issue had to be addressed two decades ago for active database systems—for example, I spent the

calendar year of 1998 predominantly concerned with this issue—but there remain some infrequently accessed legacy

data

stores that will fail on this ambiguity. If the character string '34' is stored in a column named

YEAR,

does it refer to something that happened in the 20th century or an anticipated future event a decade after this

book

is being written? Some domain knowledge is needed to answer this.

Some rather concise Pandas code can tell us something useful. A first step is cleaning the padding in the fixed length character field. The whitespace padding is not generally useful in our code. After that we can look at the length of each value, count the number of records per length, and sort by those lengths, to produce a histogram.

amtrak['StationName'] = amtrak.StationName.str.strip() hist = amtrak.StationName.str.len().value_counts().sort_index() hist

4 15

5 46

6 100

7 114

...

17 15

18 17

19 27

20 116

Name: StationName, Length: 17, dtype: int64

The pattern is even more striking if we visualize it. Clearly station names bump up against that 20 character width. This is not quite yet a smoking gun, but it is very suggestive.

hist.plot(kind='bar',

title="Lengths of Station Names")

plt.savefig('img/(Ch01)Lengths of Station Names')

We want to be careful not to attribute an underlying phenomenon as a data artifact. For example, in preparing this section, I started analyzing a collection of Twitter 2015 tweets. Those naturally form a similar pattern of "bumping up" against 140 characters—but I realized that they do this because of a limit in the accurate underlying data, not as a data artifact. However, the Twitter histogram curve looks similar to that for station names. I am aware that Twitter doubled its limit in 2018; I would expect an aggregated collection over time to show asymptotes at both 140 and 280, but as a "natural" phenomenon.

If the character width limit changed over the history of our data, we might see a pattern of multiple soft

limits.

These are likely to be harder to discern, especially if those limits are significantly larger than 20 characters

to

start with. Before we absolutely conclude that we have a data artifact rather than, for example, an

Amtrak

naming rule, let us look at the concrete data. This is not impractical when we start with a thousand rows, but

it

becomes more difficult with a million rows. Using Pandas' .sample() method is often a good way to

view a

random subset of rows matching some filter, but here we just display the first and last few.

amtrak[amtrak.StationName.str.len() == 20]

| Code | StationName | City | State | Visitors | |

|---|---|---|---|---|---|

| 28 | ARI | Astoria (Shell Stati | Astoria | OR | 27872 |

| 31 | ART | Astoria (Transit Cen | Astoria | OR | 22116 |

| 42 | BAL | Baltimore (Penn Stat | Baltimore | MD | 19953 |

| 50 | BCA | Baltimore (Camden St | Baltimore | MD | 32767 |

| ... | ... | ... | ... | ... | ... |

| 965 | YOC | Yosemite - Curry Vil | Yosemite National Park | CA | 28352 |

| 966 | YOF | Yosemite - Crane Fla | Yosemite National Park | CA | 32767 |

| 969 | YOV | Yosemite - Visitor C | Yosemite National Park | CA | 29119 |

| 970 | YOW | Yosemite - White Wol | Yosemite National Park | CA | 16718 |

116 rows × 5 columns

It is reasonable to conclude from our examined data that truncation is an authentic problem here. Many of the samples have words terminated in their middle at character length. Remediating it is another decision and more effort. Perhaps we can obtain the full texts as a followup; if we are lucky the prefixes will uniquely match full strings. Of course, quite likely, the real data is just lost. If we only care about uniqueness, this is likely not to be a big problem (the three letter codes are already unique). However, if our analysis concerns the missing data itself we may not be able to proceed at all. Perhaps we can decide in a problem-specific way that prefixes nonetheless are a representative sample of what we are analyzing.

A similar issue arises with numbers of fixed lengths. Floating-point numbers might lose desired precision, but

integers might wrap and/or clip. We can examine the Visitors column and determine that it stores a

16-bit

SMALLINT. Which is to say, it cannot represent values greater than 32,767. Perhaps that is more

visitors

than any single station will plausibly have. Or perhaps we will see data corruption.

max_ = amtrak.Visitors.max()

amtrak.Visitors.plot(

kind='hist', bins=20,

title=f"Visitors per station (max {max_})")

plt.savefig(f"img/(Ch01)Visitors per station (max {max_})")

In this case, the bumping against the limit is a strong signal. An extra hint here is the specific limit reached. It is one of those special numbers you should learn to recognize. Signed integers of bit-length N range from $-2^{N-1}$ up to $2^{N-1}-1$. Unsigned integers range from $0$ to $2^N$. 32,767 is $2^{16}-1$. However, for various programming reasons, numbers one (or a few) shy of a data type bound also frequently occur. In general, if you ever see a measurement that is exactly one of these bounds, you should take a second look and think about whether it might be an artifactual number rather than a genuine value. This is a good rule even outside the context of databases.

A possibly more difficult issue to address is when values wrap instead. Depending on the tools you use, large positive integers might wrap around to negative integers. Many RDBMS's—including PostgreSQL—will simply refuse transactions with unacceptable values rather than allow them to occur. But different systems vary. Wrapping on sign is obvious in the case of counts that are non-zero by their nature, but for values where both positive and negative numbers make sense, detection is harder.

For example, in this Pandas Series example, which is cast to a short integer type, we see values around positive and negative 15 thousand, both as genuine elements and as artifacts of a type cast.

ints = pd.Series(

[100, 200, 15_000, 50_000, -15_000, -50_000])

ints.astype(np.int16)

0 100 1 200 2 15000 3 -15536 4 -15000 5 15536 dtype: int16

In a case like this, we simply need to acquire enough domain expertise to know whether the out of bounds values that might wrap can ever be sensible measurements. I.e. is 50,000 reasonable for this hypothetical measure? If all reasonable observations are of numbers in the hundreds, wrapping at 32 thousand is not a large concern. It is conceivable that some reasonable value got there as a wrap from an unreasonable one; but wrong values can occur for a vast array of reasons, and this would not be an unduly large concern.

Note that integers and floating-point numbers only come, on widespread computer architectures, in sizes of 8, 16, 32, 64, and 128-bits. For integers those might be signed or unsigned, which would halve or double the maximum number representable. These maximum values representable within these different bit widths are starkly different from each other. A rule of thumb, if you can choose the integer representation, is to leave an order-of-magnitude padding from the largest magnitude you expect to occur. However, sometimes even an order-of-magnitude does not set a good bound on unexpected (but accurate) values.

For example, in our hypothetical visitor count, perhaps a maximum of around 20k was reasonably anticipated, but over the years, that got as high as 35k, leading to the effect we see in the plot (of hypothetical data). Allowing for 9,223,372,036,854,775,807 $(2^{63}-1)$ visitors to a station might have seemed like unecessary overhead to the initial database engineers. However, a 32-bit integer, with a maximum of 2,147,483,647 $(2^{31}-1)$ would have been a better choice, even though the actual maximum remains far larger than will ever be observed.

Let us turn now to some other data formats you are likely to work with, generally binary data formats, often used for scientific requirements.

Let a hundred flowers bloom; let a hundred schools of thought contend.

–Confucian saying

Concepts:

A variety of data formats that you may encounter can be used for holding tabular data. For the most part these do not introduce any special new cleanliness concerns that we have not addressed in earlier sections. Properties of the data themselves are discussed in later chapters. The data type options vary between storage formats, but the same kinds of general concerns that we discussed with RDBMS's apply to all of them. In the main, from the perspective of this book, these formats simply require somewhat different APIs to get at their underlying data, but all provide data types per column. The formats addressed herein are not an exhaustive list, and clearly new ones may arise or increase in significance after the time of this writing. But the principles of access should be similar for formats not discussed.

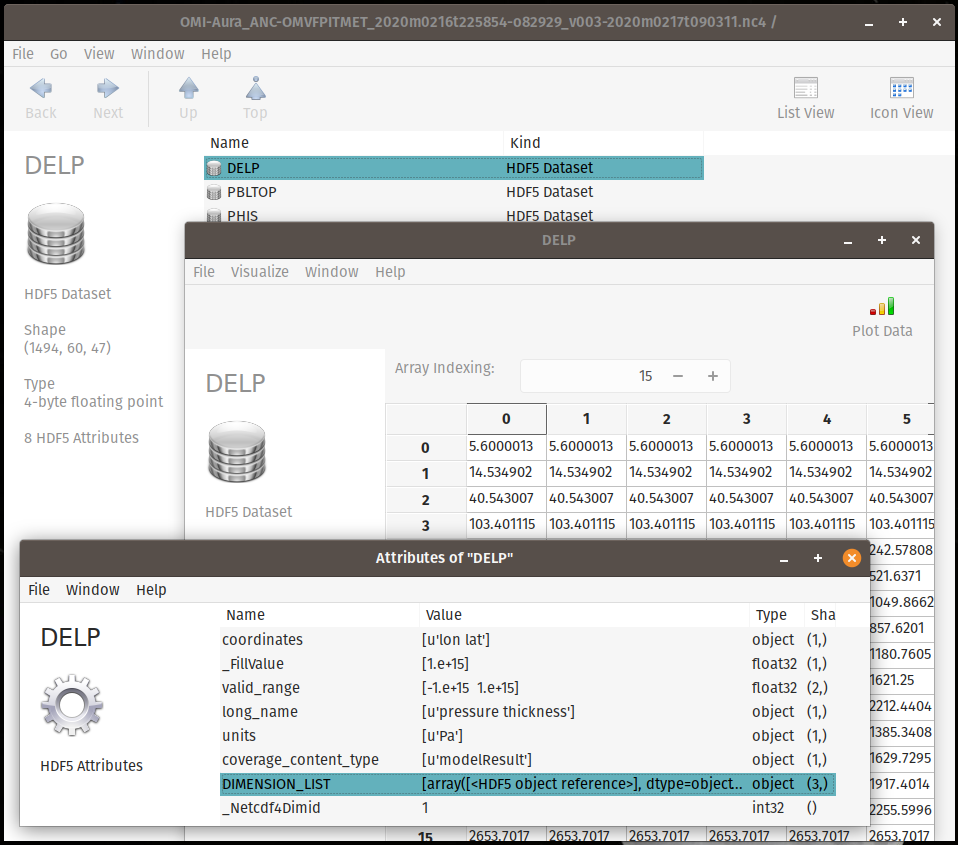

The closely related formats HDF5 and NetCDF (discussed below) are largely interoperable, and both provide ways of storing multiple arrays, each with metadata associated and also allowing highly dimensional data, not simply tabular 2-D arrays. Unlike with the data frame model, arrays within these scientific formats are of homogeneous type throughout. That is, there is no mechanism (by design) to store a text column and a numeric column within the same object, nor even numeric columns of different bit-widths. However, since they allow multiple arrays in the same file, full generality is available, just in a different way than within the SQL or data frame model.

SQLite (discussed below) is a file format that provides a relational database, consisting potentially of multiple tables, within a single file. It is extremely widely used, being present and used everywhere from on every iOS and Android device up to the largest supercomputer clusters. An interface for SQLite is part of the Python standard library and is available for nearly all other programming languages.

Apache Parquet (discussed below) is a column-oriented data store. What this amounts to is simply a way to store data frames or tables to disk, but in a manner that optimizes common operations that typically vectorize along columns rather than along rows. A similar philosophy motivates columnar RDBMS's like Apache Cassandra and MonetDB, both of which are SQL databases, simply with different query optimization possibilities. kdb+ is an older, and non-SQL, approach to a similar problem. PostgreSQL and MariaDBmariadb also both have optional storage formats that use column organization. Generally these internal optimizations are not direct concerns for data science, but Parquet requires its own non-SQL APIs.

mysql for

compatibility.

There are a number of binary data formats that are reasonably widely used, but I do not specifically discuss in this book. Many other formats have their own virtues, but I have attempted to limit the discussion to the handful that I feel you are most likely to encounter in regular work as a data scientist. Some additional formats are listed below, with characterization mostly adapted from their respective home pages. You can see in the descriptions which discussed formats they most resemble, and generally the identical data integrity and quality concerns apply as in the formats I discuss. Differences are primarily in performance characteristics: how big are the files on disk, how fast can they be read and written under different scenarios, and so on.

Apache Arrow is a development platform for in-memory analytics. It specifies a standardized language-independent columnar memory format for flat and hierarchical data, organized for efficient analytic operations on modern hardware.

bcolz provides columnar, chunked data containers that can be compressed either in-memory and on-disk. Column storage allows for efficiently querying tables, as well as for cheap column addition and removal. It is based on NumPy, and uses it as the standard data container to communicate with bcolz objects, but it also comes with support for import/export facilities to/from HDF5/PyTables tables and pandas dataframes.

Zarr provides classes and functions for working with N-dimensional arrays that behave like NumPy arrays but whose data is divided into chunks and each chunk is compressed. If you are already familiar with HDF5 then Zarr arrays provide similar functionality, but with some additional flexibility.

There is a slightly convoluted history of the Hierarchical Data Format, which was begun by the National Center for Supercomputing Applications (NCSA) in 1987. HDF4 was significantly over-engineered, and is far less widely used now. HDF5 simplified the file structure of HDF4. It consists of datasets, which are multidimensional arrays of a single data type, and groups, which are container structures holding datasets and other groups. Both groups and datasets may have attributes attached to them, which are any pieces of named metadata. What this, in effect, does is emulate a filesystem within a single file. The nodes or "files" within this virtual filesystem are array objects. Generally, a single HDF5 file will contain a variety of related data for working with the same underlying problem.

The Network Common Data Form (NetCDF) is a library of functions for storing and retrieving array data. The project itself is nearly as old as HDF, and is an open standard that was developed and supported by a variety of scientific agencies. As of its version 4, it supports using HDF5 as storage backend; earlier versions used some other file formats, and current NetCDF software requires continued support for those older formats. Occasionally NetCDF-4 files do enough special things with their contents that reading them with generic HDF5 libraries is awkward.

Generic HDF5 files typically have an extension of .h5, .hdf5, .hdf, or

.he5. These should all represent the same binary format, and other extensions occur sometimes too.

Some

corresponding extensions for HDF4 also exist. Oddly, even though NetCDF can consist of numerous underlying file

formats, they all seem standardized on the .nc extension.